Continuing with my education in statistics, I'm reading Dalgaard's book, Introductory Statistics with R. The topic of this post is linear regression by least squares.

We're going to model errors in the data as follows:

[UPDATE: this is not the correct way to model the errors. See here.]

The variance of x is computed in the usual way:

The covariance of x and y is defined in such a way that the variance of x is equal to the covariance of x with itself. That makes it easy to remember!

Correlation is just like covariance, only it is computed using z-scores (normalized data).
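As a quick check on these three definitions, here is a minimal sketch in R. The data vectors are made up for illustration and are not the data used in this post. It assumes the usual sample (n - 1) versions:

$$s_x^2 = \frac{1}{n-1}\sum_i (x_i - \bar{x})^2, \qquad s_{xy} = \frac{1}{n-1}\sum_i (x_i - \bar{x})(y_i - \bar{y})$$

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)
n <- length(x)

# Sample variance: sum of squared deviations over n - 1
var_x <- sum((x - mean(x))^2) / (n - 1)

# Sample covariance: same formula with one factor swapped for y
cov_xy <- sum((x - mean(x)) * (y - mean(y))) / (n - 1)

# Correlation: the covariance of the z-scores
zx <- (x - mean(x)) / sd(x)
zy <- (y - mean(y)) / sd(y)
r_xy <- sum(zx * zy) / (n - 1)

# Compare with the built-in functions
all.equal(var_x, var(x))
all.equal(cov_xy, cov(x, y))
all.equal(r_xy, cor(x, y))

# The variance of x is the covariance of x with itself
all.equal(cov(x, x), var(x))
```

Each `all.equal` call should return `TRUE` if the hand-written formula agrees with R's built-in function.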

As discussed in Dalgaard (Ch. 5), the estimated slope is:

while the intercept is:

The linear regression line passes through the point of means, (x̄, ȳ).
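For reference, the standard least-squares estimates for a line y = α + βx are

$$\hat{\beta} = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2}, \qquad \hat{\alpha} = \bar{y} - \hat{\beta}\,\bar{x}$$

The sketch below computes both by hand. The data are made up for illustration (not the data from this post), and the names `beta_hat` and `alpha_hat` are my own.

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)

# Slope: sum of cross-deviations over sum of squared x-deviations
beta_hat <- sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)

# Intercept: chosen so the line passes through the point of means
alpha_hat <- mean(y) - beta_hat * mean(x)

# The slope is also the covariance divided by the variance of x
all.equal(beta_hat, cov(x, y) / var(x))

# Evaluating the fitted line at mean(x) gives mean(y)
all.equal(alpha_hat + beta_hat * mean(x), mean(y))
```

The second check follows directly from the definition of the intercept, which is why the line always goes through the point of means.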

Let R do the work:

The estimated slope is 3.64, while the true value is 2.4. I thought the problem was the last point, but it goes deeper than that.

Much more information is available using the "extractor function" summary:
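A minimal sketch of what this looks like, using made-up data rather than the data from this post. `lm`, `coef` and `summary` are standard R functions; the variable names `fit` and `s` are my own.

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)

# Fit the model y = intercept + slope * x by least squares
fit <- lm(y ~ x)

# Just the two estimated coefficients
coef(fit)

# The summary object holds much more than the coefficients
s <- summary(fit)
s$coefficients   # estimates, standard errors, t values, p-values
s$sigma          # residual standard error
s$r.squared      # multiple R-squared
```

Printing `s` itself gives the familiar formatted report. Pulling out the components by name is handy when you want to reuse the numbers.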

The correlation coefficient is

That is

I am not sure why this doesn't match the output above.
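One possible explanation, offered as a guess since the output is not reproduced here: `summary` reports the multiple R-squared, not the correlation coefficient r. For a simple linear regression with one predictor, the two are related by squaring. The sketch below checks this on made-up data (not the data from this post).

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)

# Pearson correlation coefficient
r <- cor(x, y)

# R-squared values as reported by summary()
fit <- lm(y ~ x)
r2 <- summary(fit)$r.squared
adj_r2 <- summary(fit)$adj.r.squared

# With a single predictor, r squared equals the multiple R-squared
all.equal(r^2, r2)

# The adjusted R-squared is a different, slightly smaller number
c(r = r, r_squared = r2, adjusted = adj_r2)
```

If the mismatch in the post is of this kind, squaring the correlation coefficient should recover the "Multiple R-squared" line of the summary.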

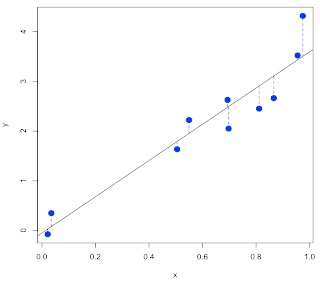

To get the plot shown at the top of the post we use another extractor function on the linear model:

w contains the predicted values of y for each x according to the model.
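A sketch of how such a plot can be made, assuming the extractor in question is `fitted` (my assumption) and using made-up data rather than the data from this post:

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)

fit <- lm(y ~ x)

# Predicted y for each observed x, according to the model
w <- fitted(fit)

# Scatter plot of the data with the fitted line on top
plot(x, y, pch = 16)
lines(x, w, col = "red", lwd = 2)

# Vertical segments from each point to the line show the residuals
segments(x, y, x, w, lty = 2)
```

Since `x` is already sorted here, `lines(x, w)` draws a clean line. With unsorted data, `abline(fit)` is the simpler choice.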

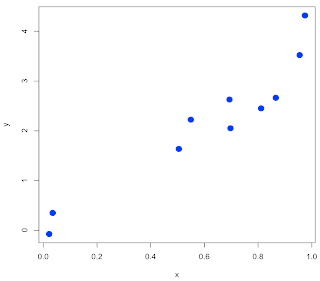

We can do an even fancier plot, with confidence bands, as follows.
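One way to draw such bands is to use `predict` with `interval = "confidence"`. This is a minimal sketch on made-up data, not necessarily the approach used for the figure in this post. The names `new_x` and `band` are my own.

```r
# Made-up illustrative data (NOT the data from the post)
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(3.1, 4.8, 8.2, 9.9, 13.5, 14.1, 18.0, 19.6, 23.3, 24.2)

fit <- lm(y ~ x)

# A fine grid of x values at which to evaluate the line and its band
new_x <- data.frame(x = seq(min(x), max(x), length.out = 50))

# Matrix with columns "fit", "lwr" and "upr" (95% confidence limits)
band <- predict(fit, newdata = new_x, interval = "confidence", level = 0.95)

plot(x, y, pch = 16)
lines(new_x$x, band[, "fit"], lwd = 2)
lines(new_x$x, band[, "lwr"], lty = 2)
lines(new_x$x, band[, "upr"], lty = 2)
```

The band describes uncertainty in the fitted line itself. Using `interval = "prediction"` instead gives wider limits for where new observations are expected to fall.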

This is promising but it is obviously going to take more work.