Recently, I came across some pages about a problem that I simulated in Python years ago, but never solved analytically, although a reader left a hint in the Comments.

Here we start by taking a look at what this author calls "random number blackjack" or the problem of the sum of uniformly distributed random numbers.

For the rest of the post, all the random numbers that we will talk about are given by a random number generator which draws from uniformly distributed real numbers in the range [0,1), i.e. greater than or equal to zero, and less than one.

We also consider the sum of one or more independent values from such a distribution, which forms a different distribution.

the game

On each turn of this unusual game, you draw a random number. You receive $100 for each turn you play, including the last. Suppose you drew 0.45, 0.35, 0.25, on the third turn you would go bust, since the sum of the numbers drawn would be 1.05 > 1.0. The payout would be $300.

And the question is, what is your expected winnings if you play the game many times? Is it worth it to play (accepting some risk of bad luck) if the initial buy-in requires $250?

initial thoughts

First of all, since the generator always gives a number less than 1.0, you always play at least two turns. The expected value (mean) of the first number is 0.5. For a continuous distribution, the mean has a technical definition, but it is always on the axis of symmetry of the distribution, which is obviously 0.5 here.

The expected value of the sum of several random numbers is the sum of their expectations.

So, for example, E[3] = 1.5. Most of the time we will quit after two or three turns, but there will occasionally be an extended run of smaller numbers and a corresponding increase in the number of turns.

[spoiler alert, you may want to look at random number blackjack to try to work out the answer]

What famous number do we know that lies between two and three? This is so trivial to simulate in Python I won't even post an example.

On one run I got 2.718531.

So it looks like the result is equal to e. (The internet says that adding more rounds doesn't help the accuracy because of limitations in the random number generator).

I came across this problem in a slightly different form in the terrific introductory calculus book, Calculus Made Easy, which was originally written by Sylvanus P. Thompson (and available as a Project Gutenberg download).

It was added by Martin Gardner when he edited the classic work (1998 ed., p. 153) and is simply a note about how e shows up everywhere.

But the problem is at least as old as Feller's classic text Probability (which I don't have, unfortunately).

related problem

Tim Black solves a related problem (here).

Recall that for a standard (fair) die or dice, the expected value of a throw is the sum of each value times the probability that it will occur. For a single die, the average is (1 + ... 6).1/6 = 21/6 or 3.5.

For two dice, we have

1(2) + 2(3) + ... + 6(7) + ... + 2(11) + 1(12)

The distribution is no longer uniform, but it is still symmetric around the value of 7, which is the mean. The expected values from adding two random draws from a uniform distribution are observed to add, but the resulting distribution is no longer uniform.

Suppose we know a probability distribution for the sum of n random numbers, for some value of n, and then calculate the probability that the sum is greater than or equal to 1. We can then obtain the expected value over a number of trials as that value n times the probability we calculated.

The probability distribution for the sum of two random numbers from the generator has a mean of 1.0, so the probability of exceeding 1.0 is 0.5. That event has a weight of 2, so the contribution to the total expected value for a number of trials is 2(0.5) = 1. So, in the same way as we did for the dice, we have that P(2) = 0.5.

We also have that P(1) = 1. That's another 1 to add to the expected value overall.

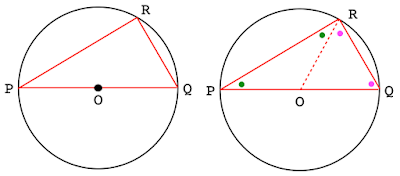

So now, what is the probability distribution for the sum of three random numbers? That gets a little trickier. The difficulty is that the probability distribution changes as n changes. Eventually, it becomes normal, but how different is it for small n like 3, 4, or 5?

Here is where our analyst has a great idea.

Imagine that we change the game slightly. We still have $100 as the payout at each stage.

From a stream of random numbers, as the numbers are drawn we write into another stream the sum at each stage. So in the example above we would get 0, 0.45, 0.80, and then at the third draw the sum is 1.05. Rather than write the last value, subtract 1 first, then write that down and keep going.

Notice that over this stretch we have a valid game, a sequence of increasing values followed by one that must be smaller than the last.

The values are

0 0.45 0.80 0.05

The values are in ascending order until the last one, which might be anything smaller than 0.80. This must be true for a single round from the game according to the rules we have set up.

Since there are n! ways of arranging n values, and only one of those arrangements has the numbers in strictly ascending order, the probability of the event (for a random uniform distribution) is 1/n!. In other words, starting at the beginning of a stream of random numbers

the probability of obtaining a result of 1 is 1.

the probability of obtaining a result of 2 is 1/2!.

the probability of obtaining a result of 3 is 1/3!.

Multiplying the value of each result by its probability we end up with 1 + 1 + 1/2!. The expected value of a series of games is the sum of all the possibilities.

E = sum 1/n!

This is just the infinite series for e.

simulations

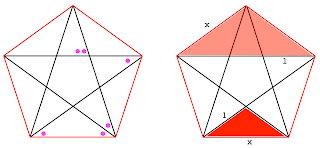

I wrote two simulations to show results relevant to this problem. The first one shows the distribution of sums of n = 1, 2, 3 or 4 random numbers. As you can see from the figure

even 3 at a time, the sums look pretty close to a normal distribution. The Central Limit Theorem says that they will tend to normal, and there is a bunch of theory that I don't understand that says if the draws are from a uniform distribution then the convergence is very rapid.

I got curious about this alternate game, so I wrote a simulation which shows that the sum of random numbers, when computed as above, by discarding the whole numbers from the result, appears to be still random uniform. (gist here). The original data and the summed series are plotted in the same hisotgram with transparency 0.5. The new data is random uniform or close to it.

I don't know what the theoretical explanation for this is. However, if it's true, then rather than do the sums, we can just draw from the random uniform distribution, and tally up the runs where all the values are increasing, until the last one If we do the bookkeeping correctly, we get e as the result.

That means the original problem has the same as the alternative one.

serious analysis

I have reworked what is on the Mathworld page as follows:

That's where I am so far. There's plenty more to investigate.

The sum of two random numbers from a uniform distribution has a distribution that is given by convolution of the individual distributions. But then each distribution for n > 2 is formed by another convolution. Ultimately, the distributions tend to the normal.

I don't see how you get to something as simple as 1 - 1/n! from that, although Tim Black gave us a different path above, which is why I wrote this post.

[Update: I've been dense. The "different path" is in fact the means by which the integral is evaluated. It is not done by writing some complex expression and then seeking the antiderivative and evaluating it. Instead, we know that the value for the cumulative distribution function at the upper bound must be 1, and at the lower bound it must be 1/n!. ]

There is a suggestion that this sort of thing is done more easily with generating or characteristic functions.

Probably the first, simple thing would be to run the simulation using random numbers and not bother with the sum part, as we also looked at here. [Update: the result is as I suspected. See gist. If we simply find the length of runs in a stream of random numbers from a uniform distribution, where they are in increasing order, and then find the mean of those lengths, the result is e.]